Gigahorse GPU Plotter

Gigahorse is a madMAx GPU plotter for compressed k32 plots that runs either fully in RAM with 256G or partially in RAM with 128G.

Other K sizes are supported as well, such as k29 to k34, and in theory any K size (if the plotter is compiled for it). RAM requirements scale with K size: k33 needs 512G, k30 only needs 64G, and so on.
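The figures above fit a simple rule of thumb: RAM doubles with each K step from the 256G needed for k32. A minimal sketch of that arithmetic, assuming the doubling holds for every K size and that partial RAM mode always needs half of full RAM mode (the text only states both for k32). `full_ram_g` and `partial_ram_g` are illustrative names, not part of Gigahorse:

```python
def full_ram_g(k: int) -> float:
    """Approximate RAM (in G) for full RAM mode: 256G at k32, doubling per K step.

    Assumption: the doubling seen in the k30 / k32 / k33 figures holds for any K.
    """
    if k < 1:
        raise ValueError("K size must be a positive integer")
    return 256 * 2.0 ** (k - 32)


def partial_ram_g(k: int) -> float:
    """Assumption: partial RAM mode needs half of full RAM mode (128G vs 256G at k32)."""
    return full_ram_g(k) / 2


if __name__ == "__main__":
    for k in range(29, 35):
        print(f"k{k}: full ~{full_ram_g(k):g}G, partial ~{partial_ram_g(k):g}G")
```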

For k30+, at least 8 GB of VRAM is required; use -S 3 or -S 2 to reduce VRAM usage (at the cost of performance). The minimum VRAM needed is 4 GB.

Supported GPUs are:

All GPUs with compute capability 5.2 (Maxwell 2.0), 6.0, 6.1 (Pascal), 7.0 (Volta), 7.5 (Turing), 8.0, 8.6 (Ampere) and 8.9 (Ada Lovelace).

This includes the GTX 1000 series, GTX 1600 series, RTX 2000 series, RTX 3000 series and RTX 4000 series.

When buying a new GPU, Turing or newer is recommended.
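A preflight check can encode the GPU requirements above. This is a hypothetical helper, not part of Gigahorse: it takes the compute capability and VRAM as inputs (how you obtain them is up to you), reads the VRAM paragraph as 8 GB at the default stream count and down to 4 GB with -S 3 or -S 2, and only applies the 8 GB rule to k30 and above because no figure is given for smaller K sizes:

```python
from __future__ import annotations

# Compute capabilities listed as supported above, as (major, minor).
SUPPORTED_CC = {(5, 2), (6, 0), (6, 1), (7, 0), (7, 5), (8, 0), (8, 6), (8, 9)}

MIN_VRAM_GB = 4   # absolute minimum stated above
K30_VRAM_GB = 8   # stated requirement for k30+ (assumed: at the default stream count)


def check_gpu(cc: tuple[int, int], vram_gb: float, k: int = 32) -> list[str]:
    """Return human-readable warnings; an empty list means no problem was found."""
    warnings = []
    if cc not in SUPPORTED_CC:
        warnings.append(f"compute capability {cc[0]}.{cc[1]} is not in the supported list")
    if vram_gb < MIN_VRAM_GB:
        warnings.append(f"{vram_gb:g} GB VRAM is below the {MIN_VRAM_GB} GB minimum")
    elif k >= 30 and vram_gb < K30_VRAM_GB:
        warnings.append("under 8 GB VRAM for k30+: try -S 3, then -S 2")
    return warnings


if __name__ == "__main__":
    print(check_gpu((8, 6), 8))  # e.g. an RTX 3060 Ti: compute capability 8.6, 8 GB
    print(check_gpu((6, 1), 6))
```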

Usage

Usage:

cuda_plot [OPTION...]

-C, --level arg Compression level (default = 1, min = 1, max = 9)

-x, --port arg Network port (default = 8444, chives = 9699, MMX = 11337)

-n, --count arg Number of plots to create (default = 1, -1 = infinite)

-g, --device arg CUDA device (default = 0)

-r, --ndevices arg Number of CUDA devices (default = 1)

-t, --tmpdir arg Temporary directory for plot storage (default = $PWD)

-2, --tmpdir2 arg Temporary directory 2 for hybrid mode (default = @RAM)

-d, --finaldir arg Final destinations (default = <tmpdir>, remote = @HOST)

-z, --dstport arg Destination port for remote copy (default = 1337)

-w, --waitforcopy Wait for copy to start next plot

-p, --poolkey arg Pool Public Key (48 bytes)

-c, --contract arg Pool Contract Address (62 chars)

-f, --farmerkey arg Farmer Public Key (48 bytes)

-Z, --unique Make unique plot (default = false)

-S, --streams arg Number of parallel streams (default = 4, must be >= 2)

-M, --memory arg Max shared / pinned memory in GiB (default =

Important: -t only stores the final plot file, caching it until it is copied to its final destination.

Important: -2 should be an SSD for partial RAM mode, not a RAM disk.

Important: -M is needed on Windows to limit max GPU shared memory, see below.

Note: The first plot will be slow due to memory allocation, so -n -1 (keep plotting indefinitely) is the recommended way to run Gigahorse.
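When scripting the plotter, it can help to assemble the command line in one place and reject values outside the documented ranges (-C from 1 to 9, -n as -1 or a positive count, -S at least 2). A minimal sketch; `build_cuda_plot_args` is a hypothetical helper that only emits flags from the option list above, and it does not check whether your combination of -p, -c and -f is valid for the network you plot for:

```python
from __future__ import annotations


def build_cuda_plot_args(
    binary: str,
    farmer_key: str,
    *,
    pool_key: str | None = None,
    contract: str | None = None,
    level: int = 1,
    count: int = 1,
    port: int | None = None,
    tmpdir: str | None = None,
    tmpdir2: str | None = None,
    finaldirs: tuple[str, ...] = (),
    streams: int | None = None,
    memory_gib: int | None = None,
) -> list[str]:
    """Assemble a cuda_plot argument list, validating the documented ranges."""
    if not 1 <= level <= 9:
        raise ValueError("-C must be between 1 and 9")
    if count != -1 and count < 1:
        raise ValueError("-n must be -1 (infinite) or a positive number")
    if streams is not None and streams < 2:
        raise ValueError("-S must be >= 2")

    args = [binary, "-C", str(level), "-n", str(count)]
    if port is not None:
        args += ["-x", str(port)]
    if tmpdir:
        args += ["-t", tmpdir]
    if tmpdir2:  # giving -2 switches to partial RAM mode
        args += ["-2", tmpdir2]
    for dest in finaldirs:  # -d can be repeated; "@HOST" selects remote copy
        args += ["-d", dest]
    if streams is not None:
        args += ["-S", str(streams)]
    if memory_gib is not None:
        args += ["-M", str(memory_gib)]
    if pool_key:
        args += ["-p", pool_key]
    if contract:
        args += ["-c", contract]
    return args + ["-f", farmer_key]


if __name__ == "__main__":
    cmd = build_cuda_plot_args(
        "cuda_plot_kxx", "<farmer_key>", pool_key="<pool_key>",
        level=7, count=-1, port=11337, tmpdir="/mnt/ssd/",
        finaldirs=("/mnt/hdd1/", "/mnt/hdd2/"),
    )
    print(" ".join(cmd))
```

Passing the returned list to `subprocess.run(cmd, check=True)` would start the plotter; keeping arguments as a list avoids shell quoting issues with paths.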

Full RAM mode (no -2)

The GPU plotter uses RAM internally, so there is no need for a RAM disk. All that’s needed is a -t drive to cache plots until they are copied to their final destination.

Example with full RAM mode and remote copy:

cuda_plot_kxx -x 11337 -n -1 -C 7 -t /mnt/ssd/ -d @REMOTE_HOST -p <pool_key> -f <farmer_key>

REMOTE_HOST can be a host name or an IP address; the @ prefix signals remote copy mode.
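The @ convention means a single -d value names either a local directory or a remote host. A small sketch of how a wrapper script might interpret it, assuming the remote port comes from -z (default 1337); `parse_finaldir` and `Destination` are hypothetical names, and this is not the plotter's own parser:

```python
from __future__ import annotations

from dataclasses import dataclass

DEFAULT_DSTPORT = 1337  # -z default from the option list


@dataclass(frozen=True)
class Destination:
    remote: bool
    target: str              # host name / IP address, or a local directory
    port: int | None = None  # only meaningful for remote copy


def parse_finaldir(value: str, dstport: int = DEFAULT_DSTPORT) -> Destination:
    """Interpret a -d value: a leading '@' selects remote copy to that host."""
    if value.startswith("@"):
        host = value[1:]
        if not host:
            raise ValueError("'@' must be followed by a host name or IP address")
        return Destination(remote=True, target=host, port=dstport)
    return Destination(remote=False, target=value)
```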

Example with full RAM mode and local destination:

cuda_plot_kxx -x 11337 -n -1 -C 7 -t /mnt/ssd/ -d /mnt/hdd1/ -d /mnt/hdd2/ -p <pool_key> -f <farmer_key>

Partial RAM mode (SSD for -2)

To enable partial RAM mode, specify an SSD drive for -2.

Example with partial RAM mode and remote copy:

cuda_plot_kxx -x 11337 -n -1 -C 7 -t /mnt/ssd/ -2 /mnt/fast_ssd/ -d @REMOTE_HOST -p <pool_key> -f <farmer_key>

REMOTE_HOST can be a host name or an IP address; the @ prefix signals remote copy mode.

Example with partial RAM mode and local destination:

cuda_plot_kxx -x 11337 -n -1 -C 7 -t /mnt/slow_ssd/ -2 /mnt/fast_ssd/ -d /mnt/hdd1/ -d /mnt/hdd2/ -p <pool_key> -f <farmer_key>

tmpdir2 requires around 150G – 180G of free space for k32, depending on compression level.
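Before pointing -2 at a drive, a quick free-space check can catch an undersized tmpdir2. A minimal sketch using Python's standard `shutil.disk_usage`; it assumes "G" in the figure above means GiB and defaults to the upper end (180G) of the quoted k32 range, since the exact need depends on compression level:

```python
import shutil

GIB = 1024 ** 3
TMPDIR2_NEED_GIB = 180  # upper end of the 150G - 180G range quoted for k32


def tmpdir2_has_room(path: str, need_gib: float = TMPDIR2_NEED_GIB) -> bool:
    """Return True if the file system holding `path` has at least `need_gib` free."""
    free_gib = shutil.disk_usage(path).free / GIB
    return free_gib >= need_gib


if __name__ == "__main__":
    import sys

    target = sys.argv[1] if len(sys.argv) > 1 else "."
    print(target, "OK" if tmpdir2_has_room(target) else "needs more free space")
```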

The plotter will automatically pause (and resume) plotting if tmpdir is running out of space, which can happen when copy operations are not fast enough. This free-space check does not work when multiple instances share the same drive, though. In that case, it’s recommended to partition the drive and give each plotter its own space.
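The check breaks on a shared drive because every instance reads the same free-space figure, so each one believes its next plot fits even when their plots together do not. A toy model of that arithmetic; the 100 GiB plot size is hypothetical and chosen only for round numbers:

```python
def overshoot_gib(free_gib: float, plot_gib: float, instances: int) -> float:
    """GiB by which concurrent writers overshoot the space they all checked.

    Each instance independently tests "free >= plot size" against the same
    free-space figure, so either all of them start or none of them do.
    """
    starting = instances if free_gib >= plot_gib else 0
    return max(0.0, float(starting * plot_gib - free_gib))


if __name__ == "__main__":
    # Two plotters sharing one drive with 150 GiB free: both pass the check.
    print(overshoot_gib(150, 100, instances=2))  # 50.0, so the drive fills up
    # One plotter on its own 75 GiB partition: it pauses until copies free space.
    print(overshoot_gib(75, 100, instances=1))   # 0.0
```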

With remote copy, the plotter automatically pauses when the remote host goes down or the receiver (chia_plot_sink) is restarted, and resumes once the receiver is reachable again.

Windows

On Windows there is a limit on how much pinned memory can be allocated, usually half the available RAM. You can check the limit in Task Manager: open the Performance tab, select your GPU and look at “Shared GPU memory”.

Because of this, the max pinned memory must be limited via -M. For example, if your limit is 128 GB, specify -M 128. Unfortunately this will slow the plotter down somewhat; consider using Linux for best performance.
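The -M value follows directly from the limit shown in Task Manager. A hypothetical helper that prefers that reading and otherwise falls back to the usual half-of-RAM rule; rounding down keeps the value at or below the limit:

```python
from __future__ import annotations

import math


def windows_memory_flag(total_ram_gib: float, shared_gpu_gib: float | None = None) -> list[str]:
    """Return ["-M", N] for cuda_plot on Windows.

    shared_gpu_gib: the "Shared GPU memory" value from Task Manager, if known.
    Without it, assume the usual limit of half the installed RAM.
    """
    limit = shared_gpu_gib if shared_gpu_gib is not None else total_ram_gib / 2
    return ["-M", str(math.floor(limit))]
```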

Remote copy

I’ve created a remote copy tool called chia_plot_sink, which receives plots over the network from one or more plotters and distributes them across the given list of directories in parallel.

Usage:

chia_plot_sink -- /mnt/disk0/ /mnt/disk1/ ...

chia_plot_sink -- /mnt/disk*/

Trailing slashes can be omitted. Port 1337 is used by default. The tool can of course also be used on localhost.

Pressing Ctrl+C stops accepting new connections and waits for all active copy operations to finish.

During a copy, files have a *.tmp extension, so after a crash any leftovers are easy to identify and remove.
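As an illustration of that cleanup, a hypothetical script (not shipped with Gigahorse) that lists or deletes *.tmp files in the sink's destination directories. It assumes the partial files sit directly in those directories, and it must only be run while chia_plot_sink is stopped, because a copy in progress also has the .tmp extension:

```python
from __future__ import annotations

from pathlib import Path


def leftover_tmp_files(dirs: list[str]) -> list[Path]:
    """Find *.tmp files directly inside the given chia_plot_sink destinations."""
    found: list[Path] = []
    for d in dirs:
        found.extend(sorted(Path(d).glob("*.tmp")))
    return found


def remove_leftovers(dirs: list[str], dry_run: bool = True) -> list[Path]:
    """Delete (or, by default, just report) leftover *.tmp files.

    Only run this while chia_plot_sink is stopped: during a live copy the
    file being written also has a .tmp extension.
    """
    files = leftover_tmp_files(dirs)
    for f in files:
        if dry_run:
            print(f"would remove {f}")
        else:
            f.unlink()
    return files


if __name__ == "__main__":
    import sys

    targets = [a for a in sys.argv[1:] if a != "--delete"]
    remove_leftovers(targets, dry_run="--delete" not in sys.argv)
```

Saved as, say, clean_sink_tmp.py (a hypothetical name), `python3 clean_sink_tmp.py /mnt/disk*/` only lists candidates; adding `--delete` removes them.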

Performance

CPU load is very low; a decent quad-core (2 GHz or more) is fine. In partial RAM mode, SSD speed will be the bottleneck unless you have 3-4 fast SSDs in RAID 0. MLC-based SSDs such as the Samsung 970 PRO work best; sustained sequential write speed is the most important metric. Partial RAM mode is only recommended for existing setups that don’t support 256G of RAM. With DDR3 / DDR4, full RAM mode is always cheaper and faster.

On PCIe 3.0 systems, PCIe bandwidth will be the bottleneck, and quad-channel DDR3-1600 is fast enough (DDR4 is only needed for dual-channel systems). On PCIe 3.0 systems an RTX 3060 or 3060 Ti is good enough; anything bigger won’t be much faster. On PCIe 4.0 systems paired with a bigger GPU, RAM bandwidth will be the bottleneck.

To make good use of an RTX 3090 you’ll need PCIe 4.0 together with 256G of quad-channel DDR4-3200 memory (or better).
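The bottleneck reasoning above can be sanity-checked with theoretical peak numbers. A rough sketch: PCIe 3.0 and 4.0 use 128b/130b encoding at 8 and 16 GT/s per lane, and each DDR channel moves 8 bytes per transfer. These are upper bounds, and how many times the plotter moves each byte through RAM is not modelled, so treat the ratios only as a relative comparison:

```python
def pcie_x16_gb_s(gt_per_s: float) -> float:
    """Theoretical one-direction PCIe x16 bandwidth in GB/s (128b/130b encoding)."""
    return gt_per_s * (128 / 130) * 16 / 8


def ddr_gb_s(mt_per_s: int, channels: int) -> float:
    """Theoretical peak DRAM bandwidth in GB/s: 8 bytes per transfer per channel."""
    return mt_per_s * 8 * channels / 1000


if __name__ == "__main__":
    pcie3, pcie4 = pcie_x16_gb_s(8), pcie_x16_gb_s(16)
    ddr3_quad, ddr3_dual = ddr_gb_s(1600, 4), ddr_gb_s(1600, 2)
    ddr4_quad = ddr_gb_s(3200, 4)
    print(f"PCIe 3.0 x16: {pcie3:.1f} GB/s")                       # 15.8
    print(f"PCIe 4.0 x16: {pcie4:.1f} GB/s")                       # 31.5
    print(f"DDR3-1600 quad / PCIe 3.0: {ddr3_quad / pcie3:.2f}x")  # 3.25x
    print(f"DDR3-1600 dual / PCIe 3.0: {ddr3_dual / pcie3:.1f}x")  # 1.6x
    print(f"DDR4-3200 quad / PCIe 4.0: {ddr4_quad / pcie4:.2f}x")  # 3.25x
```

Quad-channel DDR4-3200 keeps the same RAM-to-PCIe ratio on PCIe 4.0 that quad-channel DDR3-1600 has on PCIe 3.0, while dual-channel DDR3-1600 has only half that headroom, which fits the recommendations above.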

Multi-Socket Systems

Systems with more than one socket, or with processors built on multi-chip module (MCM) designs (e.g. Threadripper and EPYC) or Cluster-on-Die (CoD) technology (e.g. high-core-count Broadwell-EP), can usually be configured with either a Uniform Memory Access (UMA) topology, in which all the memory on the system is presented as one addressable space, or a Non-Uniform Memory Access (NUMA) topology, in which the memory is presented as two or more addressable spaces called nodes. Each NUMA node may additionally contain processor cores, shared caches and I/O (PCIe lanes).

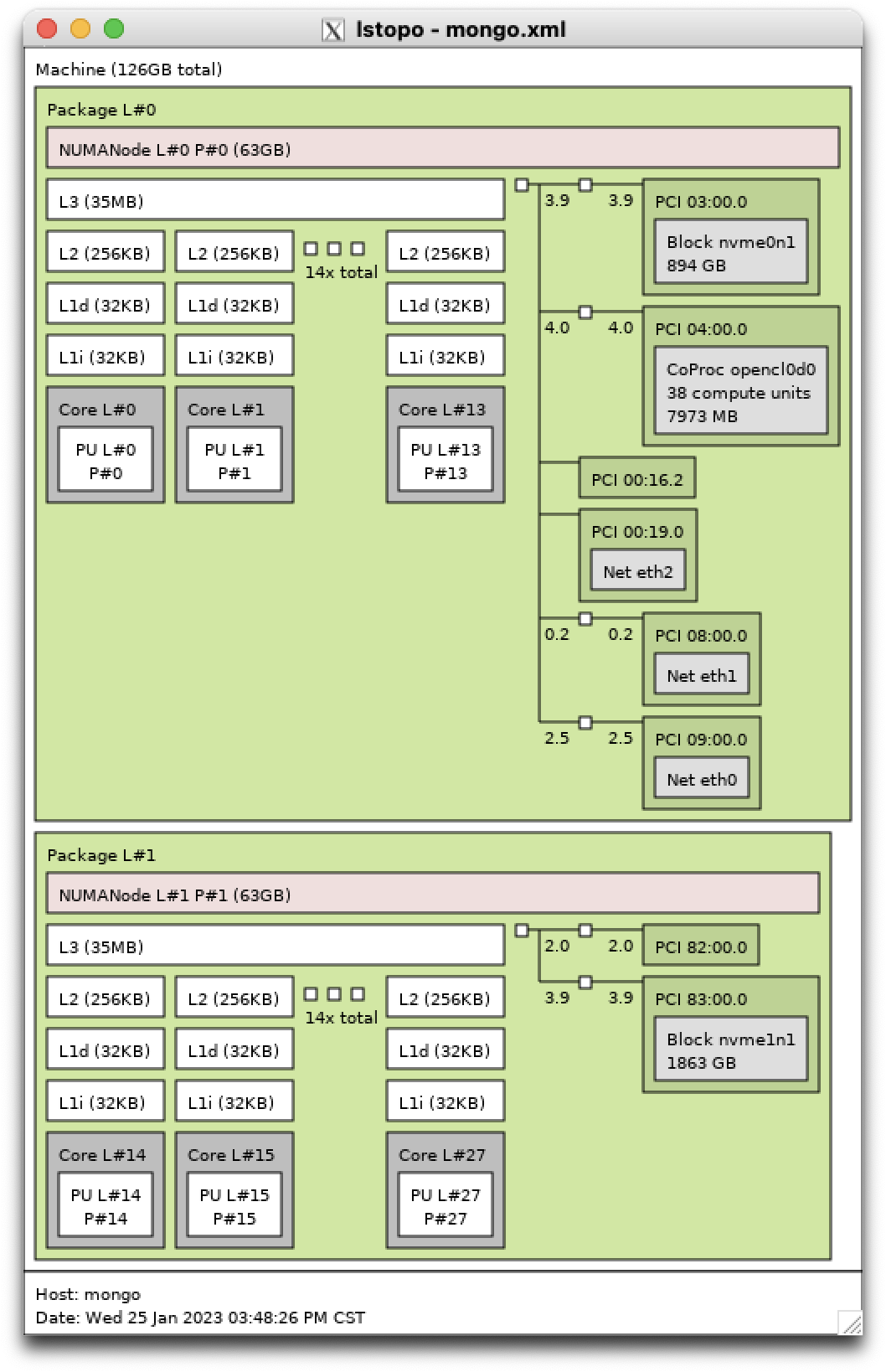

The quickest and easiest way to determine how your system is configured, how many NUMA nodes are present, and which processors, caches, and I/O are assigned to each node is to install hwloc (or hwloc-nox on non-GUI systems) and run lstopo. Here’s an example of a two-socket (2P) system configured for NUMA topology with some PCIe devices and half the system memory present in each node:

UMA topology is easier for users and applications to work with because the system looks and feels like one big computer, but performance suffers whenever data must be transferred between processors (imagine a PCIe device attached to one socket reading from and writing to memory attached to a different socket), and only limited tools are available to mitigate this. NUMA topology is harder to work with because you have to know where everything is and tune your workloads to keep them local, but latency and bandwidth can improve greatly when this is done correctly with the available tools.

If your system is configurable for either UMA or NUMA topology, you can typically do so from within your system BIOS. Some systems have options specifically to enable or disable NUMA; some systems require you to enable memory channel interleave for UMA or disable memory channel interleave for NUMA. And some systems give you some flexibility to choose how many NUMA nodes you want. For example, many EPYC systems let you disable NUMA altogether or choose the number of NUMA nodes per socket.

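On multi-socket machines, it’s recommended to run one GPU per CPU, pair each GPU with the CPU it is directly connected to, and restrict memory allocations to that CPU’s local RAM. With numactl, -N (--cpunodebind) binds the plotter to the CPUs of a node and -m (--membind) restricts its memory allocations to that node.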

Example:

numactl -N 0 -m 0 ./cuda_plot_k32 -g 0 ...

numactl -N 1 -m 1 ./cuda_plot_k32 -g 1 ...
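To find which node a GPU is attached to, Linux exposes a numa_node file for every PCI device under /sys/bus/pci/devices. A sketch that converts a bus ID as printed by `nvidia-smi --query-gpu=index,pci.bus_id --format=csv,noheader` into the sysfs address, reads the node, and builds the numactl prefix from the examples above. The helper names are hypothetical, and the remaining plotter options (the ... in the examples) still need to be appended:

```python
from __future__ import annotations

from pathlib import Path


def sysfs_pci_address(nvidia_smi_bus_id: str) -> str:
    """Convert nvidia-smi's '00000000:3B:00.0' form to sysfs' '0000:3b:00.0'."""
    domain, bus, devfn = nvidia_smi_bus_id.strip().lower().split(":")
    return f"{int(domain, 16):04x}:{bus}:{devfn}"


def gpu_numa_node(bus_id: str, sysfs_root: str = "/sys/bus/pci/devices") -> int:
    """Read the NUMA node a PCIe device is attached to (-1 means no NUMA info)."""
    path = Path(sysfs_root) / sysfs_pci_address(bus_id) / "numa_node"
    return int(path.read_text().strip())


def numactl_command(gpu_index: int, node: int, binary: str = "./cuda_plot_k32") -> list[str]:
    """Bind CPUs (-N) and memory (-m) to the GPU's node, as in the examples above."""
    if node < 0:
        raise ValueError("no NUMA information for this device")
    return ["numactl", "-N", str(node), "-m", str(node), binary, "-g", str(gpu_index)]
```

One caveat: CUDA numbers devices fastest-first by default, so the -g index only matches nvidia-smi's ordering when CUDA_DEVICE_ORDER=PCI_BUS_ID is set in the plotter's environment.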

If you have a multi-socket machine and only one GPU, but you want the plotter to be able to access all the memory on the system, you can configure it to preferentially allocate memory from the local node (ideally the node containing the GPU, storage devices, etc.) before allocating memory from other nodes:

Example:

numactl -N 0 --preferred=0 ./cuda_plot_k32 ...

Example HP Z420 + RTX 3060 Ti

My test machine is an HP Z420 workstation with a single Xeon E5-2695 v2, 256G (8x32G) of DDR3-1600 memory, a 1 TB Samsung 970 PRO SSD, a 10G fiber NIC and an RTX 3060 Ti. It cost only around $1500 to build.

Plotting time for k32 in full RAM mode is ~190 sec, or ~170 sec for level 7+ (-D enabled). In partial RAM mode it is ~280 sec, or ~250 sec for level 7+, using a 1 TB Samsung 970 PRO for both tmpdir and tmpdir2 (-D enabled, half the RAM filled with zeros). In partial RAM mode with two SSDs it is ~270 sec, or ~230 sec for level 7+, using a 1 TB Samsung 970 PRO for tmpdir2 and a 1 TB Sabrent Rocket 4.0 for tmpdir (-D enabled, limited to PCIe 3.0, half the RAM filled with zeros).
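Converting the timings above into daily output is simple division. A sketch, assuming steady-state plotting (the slower first plot excluded), copies that keep up so the plotter never pauses, and no other overhead:

```python
SECONDS_PER_DAY = 24 * 60 * 60

# k32 timings quoted above for the HP Z420 + RTX 3060 Ti, in seconds per plot.
TIMINGS = {
    "full RAM": 190,
    "full RAM, level 7+": 170,
    "partial RAM, one SSD": 280,
    "partial RAM, one SSD, level 7+": 250,
    "partial RAM, two SSDs": 270,
    "partial RAM, two SSDs, level 7+": 230,
}


def plots_per_day(seconds_per_plot: float) -> float:
    """Steady-state throughput, assuming copies keep up and no pauses occur."""
    return SECONDS_PER_DAY / seconds_per_plot


if __name__ == "__main__":
    for mode, secs in TIMINGS.items():
        print(f"{mode:32s} ~{plots_per_day(secs):.0f} plots/day")
```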

Qubic挖矿教程网
